A perspective on test-driven development

In this post we’ll take a look at a development practice called test-driven development (TDD). To give the discussion a practical perspective we’ll look at an example in embedded C. Embedded applications are notoriously challenging to test and debug. Here we use a TDD framework to implement a simple data buffer structure.

In Theory

The paradigm

- Add a test

- Run all the tests

- Write some code

- Run tests

- Refactor code

- Repeat

Motivation

At first, test-driven development (TDD) seemed to me like an excessive display of discipline in programming. Following “the paradigm” would only slow down the development process, in exchange for a promise of “code that behaves the way it was designed to behave”. Every developer is optimistic about their own work, so creating “code that works” seems like a silly motivation. Of course our code works!

I once had to debug an embedded system with application logic and a web API for which no tests had been defined, and the situation became a nightmare when strange bugs appeared. Our team of 3-4 specialists ended up spending two weeks on a bug that had to do with microSD card I/O operations. In the end the cause was an outdated software driver: a new type of SD card caused compatibility issues because of the card’s larger memory capacity. Now that some time has passed I realize this was a big rookie mistake. The whole situation could have been avoided if there had been a tailored test suite for the SD card I/O operations…

TDD in context

Software testing can strike a beginning developer as a tedious, confusing, even redundant process. Setting up test suites with unit and integration tests for a small codebase is often overkill. But as the codebase grows, a systematic approach to testing becomes relevant. Test suites are invaluable when a big codebase is put under stress, because they spare you the nightmare of tracking bugs with zero context of the system’s state.

Software testing is quite different in practice depending on the programming language and testing framework used. With an interpreted language and a framework (take Python and pytest for example) the situations that require a debugger are mainly unhandled exceptions and violations of design by contract. In a bare-metal environment with no OS the possible issues become more convoluted. A single failed pointer operation may lead to undefined system behaviour, and the time needed for the debugging that follows is hard to predict. This doesn’t mean that we should only use TDD in a single context and nowhere else (there is a book on Test-driven Development in Python).

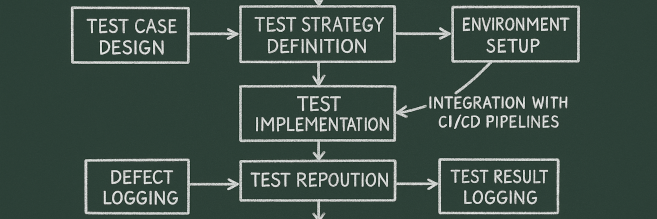

When developers start their first job there are deadlines and waiting customers who want to see everything working as they want. There is a feeling of rush and of getting things done on time. The rush rarely contributes to software quality, in either design or implementation. In the worst case taking shortcuts adds technical debt and makes codebase maintenance challenging. With a well-defined software development process, including testing routines, code acceptance, a possible CI/CD pipeline and a good team culture, a codebase remains under control.

TDD introduces reliability into an existing codebase. When I saw the paradigm of TDD, I pictured a Venn diagram - of course. Put one way, the test suites set an outer bound for the codebase. Put another way, the codebase has no more functionality than what the test suites determine. Picture what this implies. All of the codebase is tied to a set of test suites that scream if something breaks down (yes, tests are said to scream when they break). Now if one team member were eager to write production code before creating tests, the codebase would have more functionality than what the test cases determine. If, and when, things go south, the test suites are there to point to where the possible bugs originate.

In Practice

TDD framework in embedded C: Ceedling

In Ceedling we create test suites that confirm the behaviour of our production code. The framework is specifically meant for testing application-level code. The tests are compiled and run on the host, not on the target device. For this reason peripherals should be mocked so that they can be included in the tests. Ceedling has support for the VSCode environment. If we were not thinking in terms of TDD we would start by defining a data structure like this:

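    #include <stdint.h>

    /* The array size must be a compile-time constant; 16 is only an illustrative value. */
    #define BUFFER_LENGTH 16u

    /* Status codes returned by the buffer functions. */
    enum buffer_operations {
        BUFFER_OK,
        BUFFER_ERR
    };

    struct my_buffer {
        uint32_t *head;                        /* current position in buffer_array */
        uint32_t buffer_array[BUFFER_LENGTH];  /* storage for the items */
        uint32_t length;                       /* capacity; renamed so it does not clash with the macro */
    };

    typedef struct my_buffer buff;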

and then proceed to create the function API:

    #include <stdbool.h>  /* for bool */

    buff buffer_create_buffer(uint32_t buffer_length);
    buff buffer_init_buffer(uint32_t buffer_length);
    int32_t buffer_push(buff* b, uint32_t item);
    int32_t buffer_pop(buff* b, uint32_t *dest);
    bool buffer_is_full(buff* b);
    bool buffer_is_empty(buff* b);

For the sake of readability the buffer_ prefix is added to all functions to tell which source file the function is defined in. This looks good, yes? We then write the implementations and create a test to verify that our data structure works.

What we have done is actually part of step 3 in TDD, “Write some code”, and we have completely skipped steps 1 and 2.

Step 1: “Write a test”

Instead we should think about the API design before writing any code. The API design can be expressed as a collection of tests. For our buffer example it would look something like this:

    testIsBufferCreated()
    testIsBufferEmpty()
    testIsBufferFull()
    testWasHeadIncremented()
    testWasHeadDecremented()
    testAddingToUninitializedBufferFails()
    testAddingToFullBufferFails()

Let’s pause to think about what we did. At this moment, if any features were missing from the data structure definition, it would be very easy to add them. Our buffer here is quite simple. Now what if we wanted a buffer that could take in multiple items at once? What if we try to add \(n\) items when there is not enough space in the buffer? Do we want to keep the first \(m\) items, where \(m\) is the remaining space and \(n>m\)?

    testAddMultipleWasHeadIncremented()
    testAddMultipleBufferOverflowFails()
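One possible answer to the overflow question, and the one the name testAddMultipleBufferOverflowFails hints at, is an all-or-nothing rule: if the \(n\) items do not fit into the remaining \(m\) slots, nothing is stored. The sketch below only illustrates that rule. The names sketch_buffer, sketch_push_many and SKETCH_CAPACITY are hypothetical and not part of the post’s API, and an item count is used in place of the head pointer to keep the example short.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_CAPACITY 4u  /* hypothetical capacity, chosen only for illustration */

/* Hypothetical stand-in for the post's buffer. */
typedef struct {
    uint32_t items[SKETCH_CAPACITY];
    uint32_t count;  /* number of stored items, also the index of the next free slot */
} sketch_buffer;

/* Hypothetical helper: stores all n items, or none of them if they do not all fit. */
static bool sketch_push_many(sketch_buffer *b, const uint32_t *src, uint32_t n)
{
    if (b == NULL || src == NULL) {
        return false;
    }
    if (n > SKETCH_CAPACITY - b->count) {
        return false;  /* n > m: reject the whole batch and leave the buffer unchanged */
    }
    for (uint32_t i = 0u; i < n; i++) {
        b->items[b->count + i] = src[i];
    }
    b->count += n;
    return true;
}

/* Usage example. The calls sit inside assert for brevity, so do not build with NDEBUG. */
static void sketch_push_many_example(void)
{
    sketch_buffer b = { .count = 0u };
    const uint32_t batch[3] = { 1u, 2u, 3u };

    assert(sketch_push_many(&b, batch, 3u));   /* 3 of 4 slots are now used */
    assert(!sketch_push_many(&b, batch, 3u));  /* only 1 slot left, batch rejected */
    assert(b.count == 3u);                     /* the failed call changed nothing */
}
```

Keeping the first \(m\) items instead would be an equally valid design. The point is that the decision gets written down as a test before any production code exists.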

Again, we might want to be able to pop multiple items at once. Then we follow the same steps as before: “Write a test”. You get the idea. It is preferable to write down all the tests you can come up with.

We start to see the API taking shape. At this point we can start to think about how other system components interact with this data structure. We could also delegate this list of tests to a team of coders who can focus fully on the implementation.

Step 2: “Run all the tests”

You should first make all tests return an error. I know this may sound confusing, but this way we see that all our tests fail and can focus on making them pass one by one. In VSCode the tests have an indicator in the menu bar that shows passing and failing tests.

Our buffer can be implemented in less than an hour, so doing it this way is clearly overkill. Now imagine a larger codebase with tens of components. Try not to think of tests as a sign that something is not working but instead as a sign of progress. Once a given test suite passes, the component is done! When a superior asks about the state of some project you can now say “67% of all tests pass” instead of “we’re getting there”.
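To make the “everything fails first” idea concrete, here is a minimal sketch in plain C with no framework. In a Ceedling project the Unity macros TEST_FAIL_MESSAGE or TEST_IGNORE_MESSAGE would typically play the role of the placeholders. Everything apart from the test names (test_fn, test_case, all_tests, run_all_tests) is a hypothetical helper invented for this illustration, and only four of the tests are listed to keep it short.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

typedef bool (*test_fn)(void);

/* Placeholders: each test reports failure until real checks replace the body. */
static bool testIsBufferCreated(void)         { return false; }
static bool testIsBufferEmpty(void)           { return false; }
static bool testIsBufferFull(void)            { return false; }
static bool testAddingToFullBufferFails(void) { return false; }

typedef struct {
    const char *name;
    test_fn run;
} test_case;

static const test_case all_tests[] = {
    { "testIsBufferCreated",         testIsBufferCreated },
    { "testIsBufferEmpty",           testIsBufferEmpty },
    { "testIsBufferFull",            testIsBufferFull },
    { "testAddingToFullBufferFails", testAddingToFullBufferFails },
};

#define TEST_COUNT (sizeof all_tests / sizeof all_tests[0])

/* Runs every test, prints one line per result and returns how many passed. */
static size_t run_all_tests(void)
{
    size_t passed = 0;
    for (size_t i = 0; i < TEST_COUNT; i++) {
        assert(all_tests[i].run != NULL);
        bool ok = all_tests[i].run();
        printf("%-32s %s\n", all_tests[i].name, ok ? "PASS" : "FAIL");
        if (ok) {
            passed++;
        }
    }
    printf("%zu of %zu tests pass\n", passed, TEST_COUNT);
    return passed;
}
```

With only placeholders in place run_all_tests() reports zero passing tests. The count then rises as each placeholder is replaced by a real check and the matching production code, which gives the kind of progress figure mentioned above.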

Step 3: “Write some code”

We would then proceed to write production code until…

Step 4: “Run tests”

our tests pass. After this some might say that we have finished and should move on to something else.
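As an illustration of where steps 3 and 4 could end up, the sketch below gives one possible implementation together with four of the listed tests written with plain assert (in Ceedling these would be Unity assertions). Several things here are assumptions rather than the post’s code: the capacity of 4, the reading of the buffer as a stack whose head moves up on push and down on pop, the treatment of a NULL head as “uninitialized”, and the in-place initializer buffer_init, which replaces the by-value create/init functions so that head never points into a copied struct.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define BUFFER_LENGTH 4u  /* illustrative capacity, not taken from the post */

enum buffer_operations { BUFFER_OK, BUFFER_ERR };

typedef struct my_buffer {
    uint32_t *head;  /* next free slot; NULL until buffer_init is called */
    uint32_t buffer_array[BUFFER_LENGTH];
} buff;

/* Hypothetical in-place initializer (assumption, see the text above). */
static int32_t buffer_init(buff *b)
{
    if (b == NULL) {
        return BUFFER_ERR;
    }
    b->head = b->buffer_array;
    return BUFFER_OK;
}

static bool buffer_is_empty(buff *b) { return b->head == b->buffer_array; }
static bool buffer_is_full(buff *b)  { return b->head == b->buffer_array + BUFFER_LENGTH; }

static int32_t buffer_push(buff *b, uint32_t item)
{
    if (b == NULL || b->head == NULL || buffer_is_full(b)) {
        return BUFFER_ERR;
    }
    *b->head++ = item;  /* store the item, then advance head */
    return BUFFER_OK;
}

static int32_t buffer_pop(buff *b, uint32_t *dest)
{
    if (b == NULL || dest == NULL || b->head == NULL || buffer_is_empty(b)) {
        return BUFFER_ERR;
    }
    *dest = *--b->head;  /* step head back, then read the item */
    return BUFFER_OK;
}

/* Tests. The calls sit inside assert for brevity, so do not build with NDEBUG. */
static void testAddingToUninitializedBufferFails(void)
{
    buff b = { 0 };  /* head stays NULL because buffer_init was never called */
    assert(buffer_push(&b, 1u) == BUFFER_ERR);
}

static void testWasHeadIncremented(void)
{
    buff b;
    assert(buffer_init(&b) == BUFFER_OK);
    assert(buffer_push(&b, 7u) == BUFFER_OK);
    assert(b.head == b.buffer_array + 1);
}

static void testWasHeadDecremented(void)
{
    buff b;
    uint32_t out = 0u;
    assert(buffer_init(&b) == BUFFER_OK);
    assert(buffer_push(&b, 7u) == BUFFER_OK);
    assert(buffer_pop(&b, &out) == BUFFER_OK);
    assert(out == 7u && buffer_is_empty(&b));
}

static void testAddingToFullBufferFails(void)
{
    buff b;
    assert(buffer_init(&b) == BUFFER_OK);
    for (uint32_t i = 0u; i < BUFFER_LENGTH; i++) {
        assert(buffer_push(&b, i) == BUFFER_OK);
    }
    assert(buffer_is_full(&b));
    assert(buffer_push(&b, 99u) == BUFFER_ERR);
}
```

Calling the four test functions one after another is the plain-C equivalent of a green test run: if any expectation is violated, assert aborts the program at the failing line.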

Step 5: “Refactor code”

At this step our code should run and other people should be able to use it. Now we refactor the code with a specific goal in mind: readability, performance, or maintainability. We basically rewrite existing code to make it better. Once this is done we rerun our tests, and if all tests pass, our code still works as expected.

Step 6: “Repeat”

We follow this pattern for all of our codebase.

Conclusion

The implemented tests become a fundamental part of the software development process. Since nothing should be taken for granted in embedded design, all hardware should go through test suites.